Just think about it: if a piece of software were complex enough to be smarter than humans, would you still dare to use it? Right now, I can install Windows 7 to try it out. If I like it, I use it all the time; if I don't, I uninstall it. There is nothing to worry about. But suppose the software of the future becomes so complex that it has feelings. When you want to delete it, it desperately begs you not to, appealing to both your reason and your emotions, until you can't bear to do it, as if uninstalling it would be killing a human being.

In the movie, when David is abandoned, he cries out "Mommy" again and again, and his mom weeps. She knows that David is nothing but rubber, stainless steel, silicon wafers and the like, and that his unconditional love for her is probably just a bunch of C++ code. Yet even though David is not a human being, not even a living being, he can still be seen as having the same feelings, personality, and dignity as a human, because his mom was genuinely moved and felt that David was not a machine. None of this has anything to do with whether one is made of flesh and blood.

The more code we write, the more ethical issues arise. Once a program becomes complex enough, it turns into a "living person", like Frankenstein's creature. When human beings become the creators of another species, they always see themselves as its gods, holding power over its life and death. This logic is really the same as claiming that a father commits no crime by killing his own son. Its counter-proposition is a humanitarianism that emphasizes the equality of all beings: the creator of life has no right to delete the life he created, especially life with high intelligence. So as long as a robot is designed to be complex enough and more capable than a human, it is, in the end, still a human.

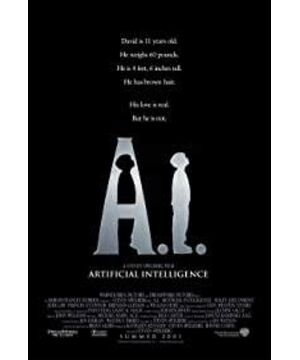
