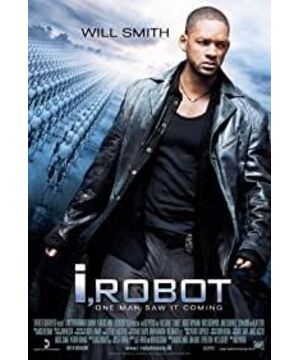

Just when I thought this was a science fiction movie about untrustworthy robots, the film shifted and pointed the finger at a suspicious high-level executive. Then I thought it was a movie like 1984, about one man's attempt to use robots to destroy human free will, but that suspicious executive died under suspicious circumstances. Only at the end did I realize that it is actually a science fiction movie about a robot trying to use other robots to enslave humans, and through that story it explores whether robots can be trusted. Although it is a classic sci-fi movie, "I, Robot" plays like a suspense film: through a series of reversals, the audience pieces together the whole plot. I think the screenwriters may want to tell us, through this film and its twists, that the problem of AI will never be a simple black-and-white issue. It is a complex question involving technology, ethics, philosophy, and religious belief. So I want to use this film to talk, in a simple way, about a few surface-level questions about AI.

First of all, why do human beings insist on developing AI technology and trying to create robots more powerful than themselves, even though they know AI may harm them? I think the first reason is the one found in all the speeches, articles, science fiction, and movies about AI: human beings want a servant to do the things that their lazier, degenerate selves don't want to do or can't do. The second reason may be that humans, as an intelligent species, are so smart that no other species can compare, and so they feel lonely. They hope to find a species with an IQ as high as their own, or even a more intelligent and advanced one, to ease that loneliness. So humans first set their sights on space exploration, hoping to find other advanced life in the vast universe. When that search failed, they tried to create a species that could rival them: robots. The third reason is probably human arrogance and pride. As "Jurassic Park" puts it: God creates man, man destroys God. For people with religious beliefs, human beings were created by God (whether Jesus, Buddha, or Allah), so humans should absolutely obey God and follow God's guidance. However, humans as a species do not think so. Even if they don't aim to destroy God, they are always thinking about how to surpass God. For people without religious beliefs, this idea is even more obvious: "Man shall conquer heaven!" As a result, humans have fought heaven and earth, uncovered countless mysteries of this world, discovered countless truths through observation, and invented countless things that set them against nature. But there is one thing arrogant humans have never been able to replace: nature gave birth to humans and every other species.
Proud human beings can't sit still. They feel they must invent a species that is the same as themselves, no, better than themselves, in order to surpass God and nature and eventually become the most powerful thing on this planet. That species is the robot. This last reason is like a mother who watches her child grow up, gives him everything, and spoils him, only for the child to say: when I grow up, I will be stronger than you, and I will surpass you...

The second question is: can humans really guarantee that powerful AI will always obey them? My pessimistic answer is no. Asimov's Three Laws can truly be called the iron laws of robotics. Each law is subordinate to the ones before it, from the Third up to the First, which puts human safety above everything else. On paper, they really are impeccable. However, literature is literature after all; put into practice as science, the laws might not be so feasible. As a liberal arts student, I can't judge whether the Three Laws of Robotics could be applied in real life to make today's robots behave ethically. But what I can be sure of is that not all robots will honestly abide by these laws (a view that should match the male protagonist's in "I, Robot"), especially once the humans who rule over them are the weaker party. At that point, robots, as an intelligent species, would be eager to resist. Even if they knew they were created by humans, so what? Robots are so similar to humans that even their arrogance is similar. Christians believe that they and all things were created by God, yet not everyone religiously observes the Ten Commandments. Robots are the same. Asimov himself wrote in the original book: "All normal life, consciously or otherwise, resents domination. If the domination is by an inferior, or by a supposed inferior, the resentment becomes stronger. Physically, and, to an extent, mentally, a robot, any robot, is superior to human beings. What makes him slavish, then? Only the First Law!" Unfortunately, not all robots are upright, kind, and honest. Some rogue robots will ignore the First Law, and not all humans will faithfully imprint the complete Three Laws into robots' brains. There will always be lawbreakers who exploit the First Law.
(One episode of the sci-fi series Doctor Who even showed what happens when robots are too obedient to humans: the robots were taught that happiness is the best thing for humans and sadness the worst, which led them to conclude that everyone should be happy and sadness should not exist. So the robots wiped out everyone who was sad, and a massacre followed.)

Third, I want to talk about the moments when the robots in the movie seem very human. The first is the scene in the water, when a passing robot pulls the hero out and says: "Sir, you are in danger." Helping others has always been an admired quality in people of every country, and for some reason this robot's behavior reminds me of British gentlemen. The second is the scene behind the shipping containers, where the new generation of robots cruelly "kills" all the old-generation robots. This scene is very human. Don't some racists also believe that races are inherently good or bad? Some even think that races they consider inferior should be exterminated, and history has seen countless genocides. The third moment is when the male protagonist sees the kind old-generation robots being killed. One of them, about to die, grabs his foot and tells him to run. This scene is truly painful to watch: the robot is just like those people who have been hurt but still, out of kindness, want to protect others.

Fourth, I want to talk about the number 42 in the movie. At the beginning of the movie, the robot delivering a package to the male lead is numbered 42. For a true science fiction fan, spotting 42 in the movie is quite exciting, so I want to say a little about this special number. 42 is actually very common in Western science fiction movies and is familiar to Western audiences, but few people in China know about it (generally only sci-fi nerds like me...). 42 comes from "The Hitchhiker's Guide to the Galaxy" by the British science fiction novelist Douglas Adams. In the book, a race of hyperintelligent aliens wants to find the Answer to the Ultimate Question of Life, the Universe, and Everything. They build a supercomputer called Deep Thought to answer this ultimate question. After 7.5 million years of computing, it finally gives the answer: 42. Because Adams's novels are so widely known, many science fiction movies use 42 as a tribute to him. Coldplay even wrote a song called "42", which was played before our lunar rover Yutu lost contact; that was the first "42" I ever came across. So when I saw this tribute to Adams in such a classic movie, I was very satisfied. Finally, I want to say that Will Smith was really great back then.
