First, we start from a basic assumption: artificial intelligence will cause unpredictable, catastrophic consequences. This is taken as a given. The movie then supplies a series of lucky accidents that let humanity survive the disaster. The most important of these is a prophet-like figure (the father of the robots) who foresees the disaster and is even willing to sacrifice his life to leave the male protagonist a clue that could easily have been ignored. The male protagonist, in turn, is smart enough to follow the trail and ultimately saves mankind. That is not to mention the other physical coincidences, such as the unmonitored underground passages and the protagonist's plot armor in the final battle. The coincidences these movies require can hardly all occur at once in reality; in other words, if disaster is inevitable, humanity's chances of finding a way out are extremely slim.

Second, I want to discuss the Three Laws themselves. The absurd consequences derived from the so-called Three Laws are really contradictions inherent in human society. Humanity as a whole and each individual human simply cannot be reconciled, which is why the later laws carry the proviso "as long as this does not violate the preceding laws." But why should humanity as a whole take the highest priority? That ranking is already a choice (in favor of the collective), and its legitimacy is entirely open to question; at the very least, its conflict with the individual is obvious. In real life, this contradiction has not produced the kind of devastating consequences shown in the movie (though one could argue it already has, the Holocaust being one example); artificial intelligence merely brings it to the surface. The mechanism that surfaces it is the complete rationalization of violence. This process of rationalization lays bare humanity's irrational contradictions and raises ethical questions that robots cannot understand. This is similar to Bauman's argument.

In addition, as a student of neither computer science nor philosophy, I will try to discuss language here. Take the First Law as an example. People sometimes do bad things with good intentions, and "harm" is hard to judge quantitatively, so how could a robot judge it accurately? The so-called Three Laws express a human fantasy: a complete description of the world's schema in a finite language (simple or otherwise). Yet the correspondence between "things" and "words" remains in question. How can a few simple laws keep all of an artificial intelligence's behavior within human expectations? We can grant that the Three Laws do not contradict one another, but by Gödel's powerful incompleteness theorem, there are bound to be some behaviors beyond the system the Three Laws define that can be neither proved nor disproved within it. So even though I do not take a negative view of artificial intelligence, I do not think it can resolve this contradiction.

By contrast, the human world holds many "most important things" that are "unspeakable", such as the irrational, emotionally driven choices the film uses as clues (the male protagonist's choice of the girl with only an 11% chance of survival, and Sonny's final choice to save the female protagonist). This may be the unique, unrepeatable, and most dignified part of being human.

Finally, let me vent: why does the stuff used to format the robots look like a chemical reagent!!! Does it have to be so weird!!!

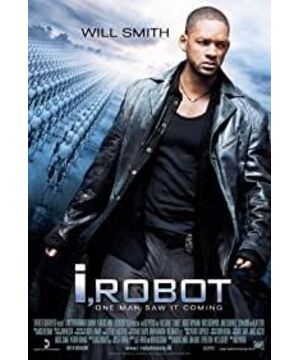
