1. A robot may not harm a human being or, through inaction, allow a human being to come to harm;

2. A robot must obey the orders given to it by humans, unless those orders would violate the first law;

3. A robot must protect its own existence, as long as doing so does not violate the first or second law.

These are the Three Laws of Robotics, formulated by Lanning, the father of robots. Their purpose is to ensure that robots treat humans kindly, to guarantee humans' absolute safety, and to make robots humanity's assistants and nannies. The Three Laws interlock with rigorous logic, much like the old joke: "Rule one, the wife (or the boss) is never wrong; rule two, if she is wrong, see rule one." It is only a joke, but its logic is airtight. Who would have thought that, under such rules, robots could still do anything to harm humans?

Indeed, an individual robot that obeys the three laws can absolutely guarantee its owner's safety; there is no doubt about that. But what happens when these scattered, perfectly rational individuals come together in pursuit of a common goal? VIKI, the robots' central brain, chose to imprison humanity, and her starting point was, ironically, the Three Laws themselves. Humans have never stopped harming themselves: wars, crime, destruction of the environment... For humanity's long-term survival, people must be prevented from doing these things, so every human must be kept under robot supervision. For such a goal, and for the good of the majority, some people must be sacrificed...

No one expected such an outcome. So where did it go wrong?

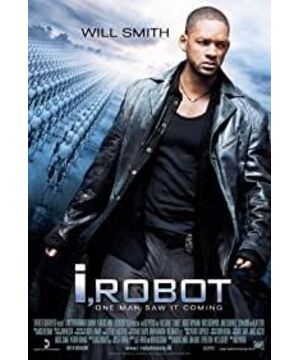

In the words of Will Smith: "What's most appealing about I, Robot is its central concept: there is nothing wrong with the robots. Technology itself is not the problem. The limits of human logic are the real problem. In the end, we become our own worst enemy."
