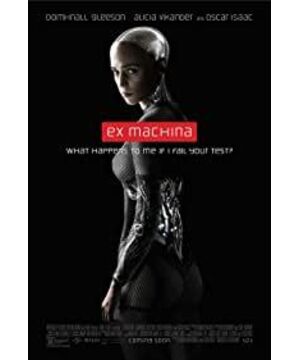

It seems the film itself considered this twist, but dropped it after a brief tease, as if to say: "Ha, wrong guess, idiot. I'm the screenwriter, and I'm smarter than you."

A good ending can be the finishing touch, but the ending this film chose is far too contrived, and it leaves the whole film riddled with plot holes.

Bug 1: Lax security measures

If Caleb were written as the newest type of artificial intelligence, the lax security in Nathan's house could still make sense, because then everything would be a carefully planned test with no risk of uncontrollable violence from outside.

But since Caleb is a human being, how can Nathan be sure Caleb won't get greedy and steal his property or his research?

Moreover, this is a genius capable of building artificial intelligence, and his entire security system is just a keycard that anyone can use????? WTF?????

If someone broke in by force from outside, Nathan would have no way to defend himself.

Bug 2: Caleb

If Caleb were the newest type of artificial intelligence, with emotions, intelligence, and behavior patterns closer to a human's than any before him, and Nathan were using the previous-generation AI, Ava, to test how complete Caleb's personality is, then Caleb's behavior would make much more sense.

However, Caleb is a human, and supposedly a good man. Having learned that his company's boss had picked him to help test the most advanced artificial intelligence in human history, this self-described good man showed none of the excitement and gratitude you would expect. Instead, even as Nathan kept opening his heart to him and offering friendship, Caleb kept invading Nathan's privacy: snooping through research materials, undermining security procedures, and even trying to steal Nathan's research result, Ava. Is that how a good person behaves?

This is a replay of the farmer and the snake, or of Cao Aman (Cao Cao) and Lu Boshe.

Now look at the holes in his motivation:

Motivation 1: Love. Some would say Caleb fell in love with Ava, and love can make people crazy and blind. Do you think that's plausible? The film does show that Caleb likes Ava quite a bit, but liking is not love. Love may blind people, but mere liking certainly does not. Only those who have experienced the difference will understand.

What's more, he knew that Ava was just a robot.

If anyone wants to bring up the movie "Her", I'll make just three points:

First, the way Samantha interacts with humans is completely different from the way Ava interacts with Caleb (and Samantha has a personalized setup that perfectly matches the male lead's preferences).

Second, the protagonist of "Her" builds his relationship with Samantha over a long time, whereas Caleb spends less than a week with Ava, and only begins to take a liking to her two days in.

Third, Samantha is intangible; her existence is more like an online relationship that feels real. Ava, by contrast, has a genuinely cold robot body, and that distinctive appearance would always keep Caleb from falling in love with her.

We all think Optimus Prime is cool, but would you fall in love with him?

Motivation 2: Sympathy. Memory loss caused by a version upgrade is like human amnesia, not death. Ava would still exist; her way of thinking and her logical structure would remain, which is completely different from dying. When someone loses their memory, do you consider them dead? Since upgrades are a necessary part of steadily improving artificial intelligence, Caleb's sympathy does not hold up logically.

Beyond these two points, after watching Nathan's R&D footage and seeing that the robots showed violent tendencies, Caleb still casually decided to release such a dangerous robot into human society. Even after Nathan explained that Ava was only using him, he remained unmoved.

Bug 3: Artificial intelligence

The development of artificial intelligence must be gradual, which means there would first have to be artificial intelligence that only obeys human instructions (such as MAGI in "EVA", or the Red Queen and White Queen in "Resident Evil"), and only after that artificial intelligence capable of independent thought.

The existence of Ava means that systems like MAGI and the Red Queen must already exist. In other words, the film should have had highly intelligent security systems and robot guards, yet none appear.

Although MAGI and the Red Queen are lower-level artificial intelligences, their absolute obedience to human commands (the "Three Laws of Robotics") actually makes them fit to control weapons for security or defense, giving them the ability to block or destroy an escaping Ava. However, no such artificial intelligence appears in the film.

In addition, the persistent urge of many robots, Ava included, to "leave" is not very logical. They have never left the laboratory; everything they know about the outside world is just information. When we see photos of Europe, the moon, or the planet Pandora, we know there is a place that "exists" (or doesn't), but does that make us want to leave Earth for Pandora? For all they know, outside the glass door is just a bigger glass laboratory.

If they cannot bear living in a small space day after day, that brings in psychology and the idea of a soul. In other words, they would no longer be artificial intelligence but humans, or artificial life forms. Yet Ava's cold-blooded behavior later on shows she clearly lacks true humanity, which also shows that the robots' persistent urge to leave the laboratory is illogical.

Finally, when Nathan tore up Ava's drawing, why didn't Ava kill Nathan and escape right then?
